Code Generation: Comparing OpenAI's ChatGPT to GitHub's CoPilot

AI code generation is a field of artificial intelligence that uses machine learning algorithms to generate code automatically. One recent development in this area is the use of newer natural language processing (NLP) models to improve the accuracy and efficiency of code generation.

GitHub's CoPilot was first released as a technical preview in June 2021 and became generally available in June 2022. CoPilot suggests code snippets based on what the user writes, such as code comments and method skeletons. It also suggests code improvements and completions, acting like a very advanced autocomplete in your IDE.

ChatGPT has been the talk of the town since its launch on 30 November 2022. It has seen stellar growth, reportedly reaching one million users in just five days, a pace widely described as record-breaking. ChatGPT uses a variant of the GPT-3.5 language model to generate code from natural language input: users describe the code they want in a chat-like setting (hence the name), and the chatbot generates the corresponding code in a variety of programming languages.

With both tools now available, let's see what the fuss is all about. Can these tools significantly improve the speed and accuracy of software development? But first, let's look at them more closely.

ChatGPT

One of ChatGPT's main advantages is its natural language processing (NLP) capability: developers can describe the code they want in plain English, and ChatGPT will generate the corresponding code.

Let’s look at a concrete example of what ChatGPT can do. Imagine you are in a situation where you want to classify Jira tickets into two categories - those that describe work on new features and those that describe maintenance.


Given a simple request, “Create a TensorFlow model for classifying Jira issue tickets into two categories: features and not-features”, the bot produced a very comprehensive explanation:

To create a TensorFlow model for classifying Jira issue tickets into two categories: features and not-features, you will need to follow the steps below:

- Collect and label a dataset of Jira issue tickets. This dataset should contain a large number of Jira issue tickets, with each ticket labeled as either a "feature" or "not-feature" ticket.

- Preprocess the text data in the Jira issue tickets to prepare it for use in a machine learning model. This may include steps such as tokenizing the text, removing punctuation and stopwords, and lemmatizing the words to reduce them to their base forms.

- Split the dataset into training and testing sets. The training set will be used to train the model, while the testing set will be used to evaluate the model's performance.

- Train a machine learning model using the training set. In this case, a good choice for the model might be a convolutional neural network (CNN) or a recurrent neural network (RNN), as these are commonly used for text classification tasks.

- Evaluate the performance of the model on the testing set, and iterate on the model and the preprocessing steps until the desired level of accuracy is achieved.

- Once the model is performing well on the testing set, it can be used on new, unseen Jira issue tickets to classify them into the two categories: features and not-features.
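As an aside, the first few of these steps (cleaning the text and splitting the labelled data) can be sketched in a few lines of plain Python. This is our own illustration, not ChatGPT's output: the ticket texts, the tiny `STOPWORDS` set and the helper names `preprocess` and `split_train_test` are hypothetical, and lemmatization is deliberately left out because it needs a linguistic library such as NLTK or spaCy.

```python
import random
import re

# Hypothetical, deliberately tiny stopword list; a real project would use a
# fuller list, e.g. one shipped with NLTK or spaCy.
STOPWORDS = {"a", "an", "the", "to", "of", "in", "on", "for", "and", "is"}


def preprocess(text):
    """Lowercase, replace punctuation with spaces, split on whitespace and
    drop stopwords. Lemmatization is intentionally omitted."""
    text = re.sub(r"[^\w\s]", " ", text.lower())
    return [token for token in text.split() if token not in STOPWORDS]


def split_train_test(items, test_fraction=0.2, seed=0):
    """Shuffle a copy of the labelled data and split it into train/test lists."""
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)
    n_test = int(len(shuffled) * test_fraction)
    return shuffled[n_test:], shuffled[:n_test]


# Hypothetical labelled tickets: (summary, label)
tickets = [
    ("Add dark mode to the settings page!", "feature"),
    ("Fix null pointer in the login handler", "not-feature"),
    ("Upgrade the logging library", "not-feature"),
    ("Allow users to export reports as CSV", "feature"),
    ("Refactor the payment module", "not-feature"),
]

cleaned = [(preprocess(summary), label) for summary, label in tickets]
train, test = split_train_test(cleaned)
print(cleaned[0])             # (['add', 'dark', 'mode', 'settings', 'page'], 'feature')
print(len(train), len(test))  # 4 1
```

A fixed `seed` makes the split reproducible, much like the `random_state=0` argument that appears in the CoPilot suggestion later on.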

OK, so far so good: a generic explanation of how to create a neural network. Anyone trying to develop an ML solution would probably know this already, but still, good job, ChatGPT. Now for the code:

```python
# Import libraries
import tensorflow as tf
from tensorflow import keras
from keras.preprocessing.text import Tokenizer
from keras.preprocessing.sequence import pad_sequences

# Load data and split into training and testing sets
data = ...
X_train, X_test, y_train, y_test = ...

# Preprocess data by converting text to numerical vectors
tokenizer = Tokenizer()
tokenizer.fit_on_texts(X_train)
X_train = tokenizer.texts_to_sequences(X_train)
X_test = tokenizer.texts_to_sequences(X_test)
X_train = pad_sequences(X_train)
X_test = pad_sequences(X_test)

# Load pre-trained word embedding model
embedding_model = ...

# Build neural network model
model = keras.Sequential()
model.add(keras.layers.Embedding(len(tokenizer.word_index)+1,
                                 embedding_model.vector_size,
                                 weights=[embedding_model.wv.vectors],
                                 input_length=X_train.shape[1],
                                 trainable=False))
model.add(keras.layers.Dense(128, activation='relu'))
model.add(keras.layers.Dense(2, activation='softmax'))

# Compile model
model.compile(optimizer='adam', loss='categorical_crossentropy', metrics=['accuracy'])

# Train model
model.fit(X_train, y_train, epochs=10)

# Evaluate model on testing data
model.evaluate(X_test, y_test)

# Use model to predict classification of new Jira issue tickets
new_tickets = ...
new_tickets = tokenizer.texts_to_sequences(new_tickets)
new_tickets = pad_sequences(new_tickets)
predictions = model.predict(new_tickets)
```

Pretty decent! The embedding model is missing, but no worries: you can always ask the bot to suggest one, and it will gladly help. Can you use ChatGPT and save money by not hiring a junior data scientist? Probably not, but soon enough many junior developers may be expected to use code generation tools like this to boost their skills and spend less time ‘being junior’.
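One detail in the preprocessing part of this code is easy to miss: `pad_sequences` is called separately on the training and testing sets without a `maxlen`, and Keras then pads each set to the length of its own longest sequence. The two matrices can therefore end up with different widths that do not match the `input_length=X_train.shape[1]` declared for the embedding layer. The hypothetical pure-Python helpers below (`fit_word_index`, `texts_to_sequences`, `pad_sequences`) only imitate the Keras behaviour for illustration; they are not the Keras code and skip details such as punctuation filtering and `num_words`.

```python
from collections import Counter


def fit_word_index(texts):
    """Map words to indices, most frequent first, starting at 1.

    Index 0 is left free for padding. Ties keep first-seen order because
    Counter preserves insertion order and sorted() is stable.
    """
    counts = Counter(word for text in texts for word in text.lower().split())
    ranked = sorted(counts, key=lambda word: -counts[word])
    return {word: i + 1 for i, word in enumerate(ranked)}


def texts_to_sequences(texts, word_index):
    """Replace each known word with its index; unknown words are dropped."""
    return [[word_index[w] for w in text.lower().split() if w in word_index]
            for text in texts]


def pad_sequences(sequences, maxlen=None):
    """Left-pad with zeros (and left-truncate) to a common length.

    With maxlen=None the longest sequence in *this* batch sets the length.
    """
    if maxlen is None:
        maxlen = max(len(seq) for seq in sequences)
    padded = []
    for seq in sequences:
        seq = seq[max(len(seq) - maxlen, 0):]  # keep the last maxlen tokens
        padded.append([0] * (maxlen - len(seq)) + seq)
    return padded


train_texts = ["add export button", "fix crash on login page"]
test_texts = ["fix export"]

word_index = fit_word_index(train_texts)
X_train = pad_sequences(texts_to_sequences(train_texts, word_index))
X_test_mismatched = pad_sequences(texts_to_sequences(test_texts, word_index))
X_test = pad_sequences(texts_to_sequences(test_texts, word_index),
                       maxlen=len(X_train[0]))

print(X_train)            # [[0, 0, 1, 2, 3], [4, 5, 6, 7, 8]]
print(X_test_mismatched)  # [[4, 2]]  (width 2 instead of 5)
print(X_test)             # [[0, 0, 0, 4, 2]]
```

In the Keras code itself, the equivalent remedy would be to pass `maxlen=X_train.shape[1]` to the `pad_sequences` calls for the test set and for new tickets, which is essentially what the CoPilot suggestion later does with a fixed `maxlen=100`.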

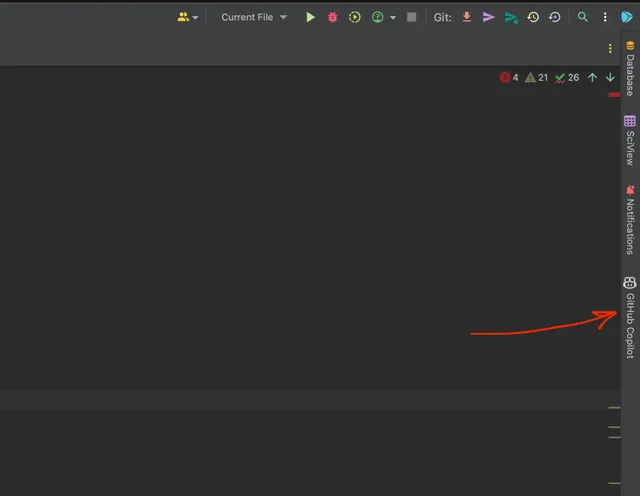

CoPilot

CoPilot is an AI-powered code completion tool that helps developers write code in real time by completing code snippets based on user input. It is designed to help with simple tasks such as solving LeetCode problems (simple, yeah…) or debugging code. CoPilot extensions exist for many IDEs, including the most popular ones: JetBrains IntelliJ IDEA and VS Code.

What makes CoPilot special is that it uses a machine learning model trained on public code from GitHub to understand the context of the code you are writing and provide appropriate suggestions based on your input.

This makes CoPilot a valuable resource for developers who want to quickly complete tasks that don't need much surrounding context. CoPilot can and does pick up the context of your code, but its ability to understand context expressed in natural language is limited compared to ChatGPT's.

Let's give CoPilot the same task we gave ChatGPT and see how it performs. Instead of writing a single sentence in a chat, we describe what we want in a docstring and a comment, then wait for CoPilot's code suggestions. Only the docstring and the comment below it were written by hand; the rest, including the other comments, was CoPilot's suggestion.

```python
def create_model():
    """Create a model that would be used for classification of Jira ticket into 2 categories: feature or not feature."""
    # Load data and split into training and testing sets
```

![Generating code with CoPilot and picking a suggestion](https://cdn.zensoftware.nl/384_f1b6988e_d119_4e34_b2a9_b461afa01c64_a54a0efb5e/384_f1b6988e_d119_4e34_b2a9_b461afa01c64_a54a0efb5e.webp)

```python
# Imports added so the suggestion can run (not part of CoPilot's output);
# they assume TensorFlow 2.x with its bundled Keras 2 API.
import pandas as pd
from tensorflow import keras
from tensorflow.keras.preprocessing.sequence import pad_sequences
from tensorflow.keras.preprocessing.text import Tokenizer


def create_model():
    """Create a model that would be used for classification of Jira ticket into 2 categories: feature or not feature."""
    # Load data and split into training and testing sets
    data = pd.read_csv("jira.csv")
    train_data = data.sample(frac=0.8, random_state=0)
    test_data = data.drop(train_data.index)

    # Tokenize data
    tokenizer = Tokenizer(num_words=1000, oov_token="<OOV>")
    tokenizer.fit_on_texts(train_data["Summary"])
    word_index = tokenizer.word_index

    train_sequences = tokenizer.texts_to_sequences(train_data["Summary"])
    train_padded = pad_sequences(train_sequences, padding='post', maxlen=100)

    test_sequences = tokenizer.texts_to_sequences(test_data["Summary"])
    test_padded = pad_sequences(test_sequences, padding='post', maxlen=100)

    # Define the model
    model = keras.Sequential([
        keras.layers.Embedding(1000, 16, input_length=100),
        keras.layers.GlobalAveragePooling1D(),
        keras.layers.Dense(24, activation='relu'),
        keras.layers.Dense(1, activation='sigmoid')
    ])

    # Compile the model
    model.compile(optimizer='adam',
                  loss='binary_crossentropy',
                  metrics=['accuracy'])

    # Train the model
    model.fit(train_padded, train_data["Feature"], epochs=30)

    # Test the model
    test_loss, test_acc = model.evaluate(test_padded, test_data["Feature"])
    print('Test accuracy:', test_acc)

    # Save the model
    model.save("jira_model.h5")

    return model
```

Another fine implementation! The accuracy of each solution depends heavily on the data provided, the quality of its preprocessing, and hyperparameter tuning. But on the surface, both AIs gave good code suggestions, and both still need further human editing.
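The two suggestions also end differently: ChatGPT's network finishes with a two-unit softmax trained with `categorical_crossentropy`, while CoPilot's uses a single sigmoid unit with `binary_crossentropy`. For two classes these heads are mathematically equivalent. Softmax is unchanged when the same constant is added to every logit, so any pair of logits (a, b) behaves like (0, z) with z = b - a, and softmax over (0, z) gives exactly sigmoid(z); the two losses then coincide. The check below uses hand-written `sigmoid`, `softmax` and loss helpers (our own, not the Keras implementations) and an arbitrary logit. One practical difference remains: Keras's `categorical_crossentropy` expects one-hot labels, so ChatGPT's version would need its labels converted, or `sparse_categorical_crossentropy` used with integer labels.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def softmax(logits):
    """Softmax; subtracting the max keeps exp() from overflowing."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def binary_crossentropy(y_true, p):
    """Loss for one example with label y_true in {0, 1} and P(label 1) = p."""
    return -(y_true * math.log(p) + (1 - y_true) * math.log(1 - p))


def categorical_crossentropy(one_hot, probs):
    """Loss for one example with a one-hot label and a probability vector."""
    return -sum(t * math.log(q) for t, q in zip(one_hot, probs))


z = 1.3                           # arbitrary logit for "is a feature"
p_sigmoid = sigmoid(z)            # CoPilot-style head: one sigmoid unit
p_softmax = softmax([0.0, z])[1]  # ChatGPT-style head: two softmax units

bce = binary_crossentropy(1, p_sigmoid)
cce = categorical_crossentropy([0, 1], softmax([0.0, z]))

print(math.isclose(p_sigmoid, p_softmax))  # True
print(math.isclose(bce, cce))              # True
```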

Comparing CoPilot to ChatGPT

CoPilot and ChatGPT are two popular tools in the software development community, and at first glance, it might seem like they are direct competitors. However, a closer examination reveals that they have different code generation capabilities and serve different purposes, even though some overlaps exist.

CoPilot's main task is code completion: it boosts developers' productivity by writing simple, repetitive code snippets for them. ChatGPT, on the other hand, is a general-purpose conversational assistant built on a GPT-3.5 language model. While it can help developers with programming tasks, it is not primarily focused on code generation. Instead, it helps developers brainstorm ideas, understand complex concepts, and get guidance on best practices, much like searching StackOverflow for answers or talking a problem through with other software engineers.

ChatGPT can help you design large systems by providing a roadmap of how they could be built, while CoPilot is better at suggesting a concrete function implementation. For example, ChatGPT can propose a syntax for a new programming language, write a context-free grammar for it, and recommend steps for implementing a compiler. CoPilot, in turn, can help you write simple functions like parseExpression() by analyzing your project's structure and overall context.
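To make the parseExpression() example concrete, here is the kind of small, self-contained function meant there. It is our own hand-written sketch, not output from either tool: a recursive-descent parser in Python for a hypothetical toy grammar of numbers, `+ - * /`, unary minus and parentheses, returning a syntax tree built from nested tuples. The grammar, the tuple format and the names `tokenize`, `Parser`, `parse_expression` and `parse` are all assumptions made for illustration.

```python
import re

# Hypothetical toy grammar:
#   expression := term (("+" | "-") term)*
#   term       := factor (("*" | "/") factor)*
#   factor     := NUMBER | "-" factor | "(" expression ")"
TOKEN_PATTERN = r"\d+(?:\.\d+)?|[-+*/()]"
TOKEN = re.compile(TOKEN_PATTERN + r"|\S")


def tokenize(src):
    """Split the source into number and operator tokens, rejecting anything else."""
    tokens = TOKEN.findall(src)
    for tok in tokens:
        if not re.fullmatch(TOKEN_PATTERN, tok):
            raise SyntaxError(f"unexpected character {tok!r}")
    return tokens


class Parser:
    def __init__(self, src):
        self.tokens = tokenize(src)
        self.pos = 0

    def peek(self):
        """Return the next token without consuming it (None at end of input)."""
        return self.tokens[self.pos] if self.pos < len(self.tokens) else None

    def advance(self):
        """Consume and return the next token."""
        tok = self.peek()
        if tok is None:
            raise SyntaxError("unexpected end of input")
        self.pos += 1
        return tok

    def parse_expression(self):
        """expression := term (("+" | "-") term)*, left-associative."""
        node = self.parse_term()
        while self.peek() in ("+", "-"):
            op = self.advance()
            node = (op, node, self.parse_term())
        return node

    def parse_term(self):
        """term := factor (("*" | "/") factor)*, left-associative."""
        node = self.parse_factor()
        while self.peek() in ("*", "/"):
            op = self.advance()
            node = (op, node, self.parse_factor())
        return node

    def parse_factor(self):
        """factor := NUMBER | "-" factor | "(" expression ")"."""
        tok = self.advance()
        if tok == "(":
            node = self.parse_expression()
            if self.advance() != ")":
                raise SyntaxError("expected ')'")
            return node
        if tok == "-":
            return ("neg", self.parse_factor())
        if tok in ("+", "*", "/", ")"):
            raise SyntaxError(f"unexpected token {tok!r}")
        return float(tok)


def parse(src):
    """Parse a complete expression and reject trailing tokens."""
    parser = Parser(src)
    tree = parser.parse_expression()
    if parser.peek() is not None:
        raise SyntaxError(f"unexpected token {parser.peek()!r}")
    return tree


print(parse("1 + 2 * 3"))    # ('+', 1.0, ('*', 2.0, 3.0))
print(parse("(1 + 2) * 3"))  # ('*', ('+', 1.0, 2.0), 3.0)
print(parse("8 - 3 - 2"))    # ('-', ('-', 8.0, 3.0), 2.0)
```

A real project would more likely return typed AST nodes and report error positions, but one function per grammar rule is the essence of a recursive-descent parseExpression(), and it is exactly the kind of well-scoped function a completion tool can fill in once the surrounding code is in place.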

Conclusion

In summary, both CoPilot and ChatGPT are valuable tools for software developers, but they have different code generation capabilities and serve different purposes. CoPilot is geared towards code completion, while ChatGPT focuses on inspiration and explanation first and only then on code generation. By understanding the unique capabilities of each tool, developers can choose the one that best fits their needs.

